
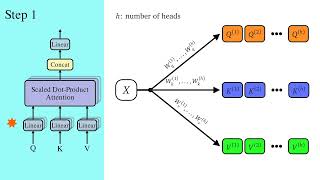

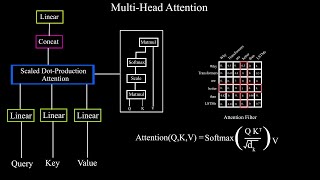

Self-Attention Using Scaled Dot-Product Approach

This video is part of a series on the attention mechanism and Transformers. Large Language Models (LLMs) such as ChatGPT have recently become widely popular thanks to rapid advances, and the attention mechanism is at the heart of these models. My goal is to explain the concepts through visual representations so that, by the end of this series, you will have a good understanding of the attention mechanism and Transformers. This video is dedicated specifically to the self-attention mechanism, which uses a method called "Scaled Dot-Product Attention". #SelfAttention #machinelearning #deeplearning
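To make "Scaled Dot-Product Attention" concrete, here is a minimal pure-Python sketch. It assumes the commonly used formulation Attention(Q, K, V) = softmax(Q Kᵀ / √d_k) V, where d_k is the key dimension. In self-attention, Q, K and V are all projections of the same input sequence X. The helper names (`matmul`, `self_attention`, etc.) and the identity projection matrices in the usage part are hypothetical and chosen only for illustration. Real models learn the projection weights and use optimized tensor libraries.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    """Swap rows and columns of a matrix given as lists of rows."""
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(q, k, v):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # raw query-key similarities
    # Dividing by sqrt(d_k) keeps the scores from growing with the dimension.
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v), weights

def self_attention(x, w_q, w_k, w_v):
    """Self-attention: queries, keys and values all come from the same input x."""
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    v = matmul(x, w_v)
    return scaled_dot_product_attention(q, k, v)

# Usage: 3 tokens with 2-dimensional embeddings (illustrative values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical projection weights
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each output row is a weighted average of the value vectors, where the weights reflect how strongly that token's query matches every key. The √d_k scaling is the step that gives the method its name.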