[Interview Question] How to Increase/Decrease Pod capacity on each Node inside Kubernetes Cluster.

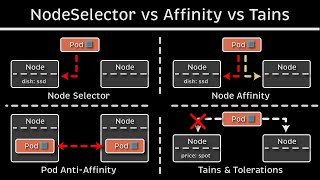

#devops #kubernetes

GitHub repo used: https://github.com/RohanRusta21/nodel...
Official Kubernetes documentation: https://kubernetes.io/docs/setup/best...

1. Node Allocatable Resources

Each node in a Kubernetes cluster has a fixed amount of CPU, memory, and storage. The maximum number of pods a node can run depends on how much of these resources is available to pods. Kubernetes schedules pods against the node's allocatable resources: the node's total capacity minus the resources reserved for the OS, the kubelet, and other system daemons, and minus the eviction threshold.

2. Node Maximum Pod Limit (--max-pods)

The kubelet on each node enforces a maximum number of pods that can run on that node. It is set with the --max-pods command-line flag or, in the kubelet configuration file, with the maxPods field. The default is 110 for most setups, but it can be adjusted to suit your requirements. To increase or decrease the maximum pod limit:

- Edit the kubelet configuration file on the node (usually /var/lib/kubelet/config.yaml).
- Set maxPods to the desired value (or change --max-pods if the kubelet is configured through command-line flags).
- Restart the kubelet to apply the change: systemctl restart kubelet.

3. Resource Requests and Limits

Resource requests and limits are defined in each pod's specification. Requests are the resources the scheduler reserves for a container and uses to decide whether a pod fits on a node; limits cap what a container can use at runtime. Because scheduling is based on requests, requests are what determine how many pods fit on a node (a container that sets only a limit gets a request equal to that limit):

- Increase pod capacity: lower each pod's resource requests so that more pods fit within the node's allocatable resources.
- Decrease pod capacity: raise each pod's resource requests so that fewer pods fit on the node.

4. Cluster Autoscaler

The Kubernetes Cluster Autoscaler automatically adjusts the size of a cluster (the number of nodes) based on resource demand.
If pods cannot be scheduled because the cluster lacks capacity, the Cluster Autoscaler adds nodes. Conversely, if nodes are underutilized and their pods can run elsewhere, it removes those nodes.

5. Node Autoscaling Tools

In cloud environments, node autoscaling tools such as AWS Auto Scaling Groups, Google Kubernetes Engine (GKE) node pools, or Azure Virtual Machine Scale Sets can automatically increase or decrease the number of nodes based on metrics such as CPU, memory, or custom metrics.

6. Taints and Tolerations

To control which pods can run on each node, you can use taints and tolerations. A taint on a node prevents pods from being scheduled there unless they have a matching toleration. This effectively reduces a node's pod capacity for general workloads while reserving the node for particular types of pods.

Summary:

- Modify maxPods (--max-pods) in the kubelet configuration to directly control the maximum number of pods per node.
- Adjust resource requests and limits in pod specifications to influence pod capacity indirectly; requests are what the scheduler counts.
- Use the Cluster Autoscaler to manage the number of nodes dynamically based on resource demand.
- Use node autoscaling tools in cloud environments to manage the size and number of nodes.
- Apply taints and tolerations to control which types of workloads each node can run.

Follow my mentors too: @PavanElthepu @MPrashant @GouravSharma @cloudwithraj @AntonPutra @AbhishekVeeramalla @kubesimplify @kshindi @DevOpsJourney

Tags: #prometheus #secrets #docker #k8s #kubernetes #cncf #rbac #serverless #grafana #autoscaling #deployment #opensource #devops #vault #terraform #kustomize #node #pods #k8scluster
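The interaction between node allocatable resources, the max-pods limit, and pod resource requests can be sketched as a small calculation. This minimal Python sketch estimates how many identical pods fit on one node. It makes several assumptions: the node sizes and reservations are hypothetical, only CPU and memory requests are counted, and the node is treated as otherwise empty. In a real cluster, DaemonSet and system pods also consume requests and count toward maxPods. This is an illustration, not the scheduler's actual code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """CPU in millicores and memory in MiB (units chosen for this sketch)."""
    cpu_m: int
    memory_mib: int


def allocatable(capacity: Resources, reserved: Resources) -> Resources:
    """Allocatable = capacity minus what is reserved for the OS, the kubelet
    and the eviction threshold (all lumped into `reserved` here)."""
    return Resources(
        cpu_m=capacity.cpu_m - reserved.cpu_m,
        memory_mib=capacity.memory_mib - reserved.memory_mib,
    )


def pods_that_fit(capacity: Resources, reserved: Resources,
                  pod_request: Resources, max_pods: int = 110) -> int:
    """Estimate how many identical pods fit on an otherwise empty node.

    Only requests are used, because the scheduler places pods by requests;
    limits do not change the result.
    """
    alloc = allocatable(capacity, reserved)
    bounds = [max_pods]  # the kubelet's maxPods setting (default 110)
    if pod_request.cpu_m > 0:
        bounds.append(alloc.cpu_m // pod_request.cpu_m)
    if pod_request.memory_mib > 0:
        bounds.append(alloc.memory_mib // pod_request.memory_mib)
    return max(0, min(bounds))


if __name__ == "__main__":
    # Hypothetical node: 4 vCPU and 16 GiB RAM, with 200m CPU and 1 GiB reserved.
    node = Resources(cpu_m=4000, memory_mib=16384)
    reserved = Resources(cpu_m=200, memory_mib=1024)

    print(pods_that_fit(node, reserved, Resources(250, 512)))               # 15 (CPU-bound)
    print(pods_that_fit(node, reserved, Resources(100, 256)))               # 38 (CPU-bound)
    print(pods_that_fit(node, reserved, Resources(100, 256), max_pods=20))  # 20 (maxPods-bound)
```

The three calls show the two levers from the description. Lowering requests raises the count from 15 to 38. Lowering maxPods caps it at 20 even though resources would allow more.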
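The taint and toleration check from section 6 can be sketched the same way. This Python sketch follows the matching rules described in the Kubernetes documentation:

- An empty toleration effect matches any effect.
- An empty key with the Exists operator matches every key.
- Exists ignores the value, while Equal compares values.

The sketch only enforces NoSchedule and NoExecute taints, because PreferNoSchedule is a soft preference. The dedicated=gpu taint and the node name node1 are hypothetical examples. This is not the scheduler's implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""            # empty key + Exists tolerates every taint
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if a single toleration matches a single taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator in ("", "Equal"):
        return tol.value == taint.value
    if tol.operator == "Exists":
        return True
    return False


def can_schedule(node_taints: List[Taint], pod_tolerations: List[Toleration]) -> bool:
    """A pod may be placed only if every hard taint on the node is tolerated."""
    for taint in node_taints:
        if taint.effect not in ("NoSchedule", "NoExecute"):
            continue  # PreferNoSchedule only discourages placement
        if not any(tolerates(t, taint) for t in pod_tolerations):
            return False
    return True


if __name__ == "__main__":
    # Hypothetical taint, as if applied with:
    #   kubectl taint nodes node1 dedicated=gpu:NoSchedule
    gpu_node = [Taint("dedicated", "gpu", "NoSchedule")]

    print(can_schedule(gpu_node, []))  # False
    print(can_schedule(gpu_node, [Toleration("dedicated", "Equal", "gpu", "NoSchedule")]))  # True
    print(can_schedule(gpu_node, [Toleration("dedicated", "Exists")]))  # True
```

A toleration only permits placement on a tainted node; it does not force the pod there. To pin workloads to reserved nodes, taints are usually combined with a node selector or node affinity.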